Duplicate detection is a crucial part of data cleansing, because duplicate entries cause a number of problems in data analytics and business operations. The pipeline shown in the figure below is a typical process flow for tackling this problem. Its second and third steps require record-pair comparisons, which rely on similarity measures such as Levenshtein distance and Jaro-Winkler similarity. In this thesis we implement or emulate such measures on the GPU and systematically evaluate the advantages of migrating this workload from the CPU to the GPU.

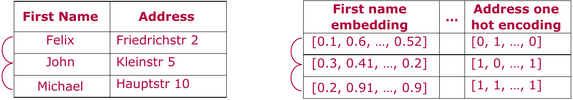
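For reference, the two similarity measures named above can be sketched as plain CPU code. This is a minimal Python sketch, not the GPU implementation developed in this thesis; it could serve as a baseline for checking GPU results. The function names, the normalisation of Levenshtein distance to a similarity in [0, 1], and the standard Winkler defaults (prefix scale `p = 0.1`, prefix capped at 4 characters) are assumptions made for illustration.

```python
# CPU reference sketches of two string similarity measures used for
# record-pair comparison. Plain Python, not a GPU implementation.


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and
    substitutions that turn a into b (two-row dynamic programming)."""
    if len(a) < len(b):
        a, b = b, a  # keep the DP row short; the distance is symmetric
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def levenshtein_similarity(a: str, b: str) -> float:
    """Hypothetical normalisation of the distance to a similarity in [0, 1]."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def jaro(s1: str, s2: str) -> float:
    """Jaro similarity: matching characters within a sliding window,
    penalised by the number of transpositions."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    window = max(max(len1, len2) // 2 - 1, 0)  # max distance between matches
    matched1 = [False] * len1
    matched2 = [False] * len2
    matches = 0
    for i, c in enumerate(s1):
        for j in range(max(0, i - window), min(i + window + 1, len2)):
            if not matched2[j] and s2[j] == c:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Count matched characters that appear in a different order in s2.
    out_of_order = 0
    k = 0
    for i in range(len1):
        if matched1[i]:
            while not matched2[k]:
                k += 1
            if s1[i] != s2[k]:
                out_of_order += 1
            k += 1
    transpositions = out_of_order / 2
    return (matches / len1 + matches / len2
            + (matches - transpositions) / matches) / 3


def jaro_winkler(s1: str, s2: str, p: float = 0.1) -> float:
    """Jaro similarity boosted for a common prefix of up to 4 characters."""
    j = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1[:4], s2[:4]):
        if a != b:
            break
        prefix += 1
    return j + prefix * p * (1 - j)


print(levenshtein("kitten", "sitting"))            # 3
print(round(jaro_winkler("MARTHA", "MARHTA"), 4))  # 0.9611
```

Both measures process one pair at a time with data-dependent branching, which is part of what makes porting them to the GPU non-trivial.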

A record can be represented as a vector of string or numerical values, as the example tables below illustrate. Numerical vectors are better suited to GPUs: comparing them reduces to uniform arithmetic that many GPU threads can execute in parallel, so for large batches such comparisons can be orders of magnitude faster than on a CPU. We therefore examine the benefits of representing records as vectors of manually crafted numerical features, compared with applying the string similarity measures directly.
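The vector-based alternative can be illustrated with a small sketch under loudly stated assumptions. The feature set here (hashed character-bigram counts per field, with a hypothetical `BUCKETS = 64`), the helper names, and the example records are all invented for illustration; they are not the features evaluated in this thesis. The point is that once records are fixed-length numerical vectors, every pairwise comparison is the same dot-product arithmetic. On a GPU, the all-pairs cosine step below corresponds to multiplying the matrix of normalised vectors by its transpose.

```python
import math

# Hypothetical hand-crafted features: hashed character-bigram counts
# per field. Not the thesis's actual feature set.
BUCKETS = 64  # hypothetical number of hash buckets per field


def field_features(value: str) -> list[float]:
    """Count the character bigrams of one field, folded into BUCKETS slots."""
    vec = [0.0] * BUCKETS
    padded = f"#{value.lower()}#"  # '#' marks the start and end of the field
    for a, b in zip(padded, padded[1:]):
        vec[(ord(a) * 31 + ord(b)) % BUCKETS] += 1.0
    return vec


def record_vector(record: tuple[str, ...]) -> list[float]:
    """Concatenate per-field feature vectors into one record vector."""
    vec: list[float] = []
    for field in record:
        vec.extend(field_features(field))
    return vec


def pairwise_cosine(vectors: list[list[float]]) -> list[list[float]]:
    """Cosine similarity of every pair of vectors. Each entry uses the same
    dot-product arithmetic, which is why this step parallelises well."""
    norms = [math.sqrt(sum(x * x for x in v)) or 1.0 for v in vectors]
    return [
        [sum(x * y for x, y in zip(u, w)) / (nu * nw)
         for w, nw in zip(vectors, norms)]
        for u, nu in zip(vectors, norms)
    ]


records = [
    ("Jon Smith", "Berlin"),
    ("John Smith", "Berlin"),
    ("Maria Garcia", "Madrid"),
]
sim = pairwise_cosine([record_vector(r) for r in records])
for row in sim:
    print([round(s, 2) for s in row])
```

Hash collisions between bigrams make this a lossy representation; whether such hand-crafted vectors match the accuracy of the string measures is exactly the kind of trade-off the evaluation has to quantify.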